Cloud Engineer, from Ostermundigen

#knowledgesharing #level 300

AWS AppConfig for Serverless Applications Demo

What is the problem?

Whether you use Terraform or the AWS CDK, different elements are often mixed in the same repository:

- AWS Lambda Function configuration (e.g., memory size, ARM vs x86 architecture)

- Related resources (e.g., IAM Role, CloudWatch Logs)

- Application code

- Application configuration (e.g., an application threshold value) passed as environment variables

Changing an application parameter that is passed to the AWS Lambda Function code as an environment variable requires redeploying the application's infrastructure stack.

Wouldn’t it be nice to decouple application configuration from infrastructure configuration and code? This is where AWS AppConfig (a component of AWS Systems Manager) can help.

AWS AppConfig in a Nutshell

Overview

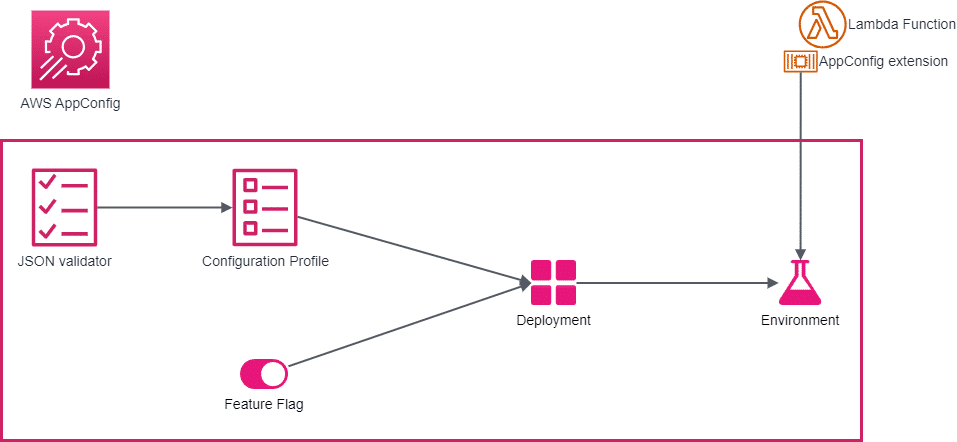

As the name suggests, AWS AppConfig is a service to manage and deploy application configuration. It consists of several basic components:

- Configurations

  - Feature Flags – Boolean flags to turn features on or off per environment

  - Configuration Profiles – configurations that set parameter values per environment

- Environments (e.g., Dev/Test/Prod)

- Deployments (e.g., all at once, linear, canary…)

Lambda Function Integration

To use AWS AppConfig, the AWS Lambda Functions are provisioned with the AWS AppConfig Lambda extension (added as a Lambda layer). Essentially, the extension runs as a sidecar process alongside your function code in the Lambda execution environment. It calls the AWS AppConfig service for you, caches configuration values and exposes a local HTTP endpoint from which your function handler retrieves the configuration. Note that each extension runs in isolation and manages its own configuration cache: different functions, and even different concurrent execution environments of the same function, do not share a cache.
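A minimal sketch of a Python handler that reads its configuration through the extension, assuming the extension listens on its default port 2772 and serves configurations under /applications/{application}/environments/{environment}/configurations/{profile}. The application, environment and profile names, and the APPCONFIG_* environment variables holding them, are hypothetical placeholders rather than the names used in the demo.

```python
import json
import os
import urllib.request

# Hypothetical names: replace with your own AppConfig application,
# environment and configuration profile.
APPLICATION = os.environ.get("APPCONFIG_APPLICATION", "demo-app")
ENVIRONMENT = os.environ.get("APPCONFIG_ENVIRONMENT", "test")
PROFILE = os.environ.get("APPCONFIG_PROFILE", "app-config")

# The extension's local HTTP server listens on port 2772 unless overridden.
EXTENSION_PORT = int(os.environ.get("AWS_APPCONFIG_EXTENSION_HTTP_PORT", "2772"))


def config_url(application: str, environment: str, profile: str,
               port: int = EXTENSION_PORT) -> str:
    """Build the local extension URL for one configuration profile."""
    return (f"http://localhost:{port}/applications/{application}"
            f"/environments/{environment}/configurations/{profile}")


def parse_config(body: bytes) -> dict:
    """Decode the configuration content; this sketch assumes a JSON profile."""
    return json.loads(body)


def handler(event, context):
    # The extension answers from its local cache, so this request does not
    # reach the AppConfig service on every invocation.
    with urllib.request.urlopen(config_url(APPLICATION, ENVIRONMENT, PROFILE)) as resp:
        config = parse_config(resp.read())
    return {"matrix_size": config.get("matrix_size")}
```

Keeping URL building and parsing in separate functions makes the handler logic testable outside Lambda, where no extension is running.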

Interesting Features

AWS AppConfig provides some interesting features:

- Validators – Configuration Profiles can have JSON Schema validators or AWS Lambda Function validators that check that a configuration meets requirements before it is deployed.

- Bake Time – This is a post-deployment timer. When Amazon CloudWatch alarms are configured, AWS AppConfig monitors them for the duration of this timer. If an alarm is raised, AWS AppConfig rolls the configuration back to the previous version in that environment.

- Cache – The AWS AppConfig extension has a local cache whose refresh (polling) interval is set with the AWS_APPCONFIG_EXTENSION_POLL_INTERVAL_SECONDS environment variable. The default is 45 seconds; the demo sets it to 30 seconds.
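To make the Validators feature more concrete, here is a minimal sketch of a Lambda validator in Python. It assumes that AWS AppConfig passes the candidate configuration base64-encoded in the event's content field and treats a raised exception as a failed validation; check the AppConfig documentation on Lambda validators before relying on this. The matrix_size bounds are hypothetical.

```python
import base64
import json

# Hypothetical limits for the demo's matrix_size parameter.
MIN_MATRIX_SIZE = 1
MAX_MATRIX_SIZE = 500


def errors(config: dict) -> list:
    """Return a list of problems found in a candidate configuration."""
    problems = []
    size = config.get("matrix_size")
    # bool is a subclass of int in Python, so exclude it explicitly.
    if not isinstance(size, int) or isinstance(size, bool):
        problems.append("matrix_size must be an integer")
    elif not MIN_MATRIX_SIZE <= size <= MAX_MATRIX_SIZE:
        problems.append(f"matrix_size must be between {MIN_MATRIX_SIZE} and {MAX_MATRIX_SIZE}")
    return problems


def handler(event, context):
    # Assumption: the candidate configuration arrives base64-encoded in
    # event["content"]; raising an exception rejects the deployment.
    config = json.loads(base64.b64decode(event["content"]))
    problems = errors(config)
    if problems:
        raise ValueError("; ".join(problems))
```

Separating the checks from the handler keeps the rules easy to unit test; the same rules could alternatively be expressed as a JSON Schema validator.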

Configuration Sources

Configuration can come from various sources. At the time of writing, the list of supported sources is:

- AWS AppConfig hosted configuration store

- AWS Systems Manager Parameter Store

- AWS Systems Manager documents

- AWS Secrets Manager

- Amazon S3 Bucket

- AWS CodePipeline (to deploy configuration stored in Git Repositories)

Costs

You are charged each time:

- the AWS AppConfig extension requests configuration data from AWS AppConfig via API calls,

- a requesting target (e.g., a Lambda Function) receives new configuration data.

| Charge | Price |
|---|---|
| Configuration requests via API calls | $0.0000002 per configuration request |
| Configurations received | $0.0008 per configuration received per target |

Following the example on the AWS pricing page¹: assuming you have one application configuration that is updated three times a day and 2,000 AWS Lambda Functions requesting configuration data every two minutes, the total monthly cost is $152.64, which is about 51 cups of coffee at $3 a cup.
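The arithmetic behind this example can be checked in a few lines of Python. This is a sketch that assumes a 30-day month, one polling request per target per interval and each update being received exactly once by every target; the function and parameter names are illustrative.

```python
from decimal import Decimal

# Unit prices from the table above (verify against the current AWS pricing page).
PRICE_PER_REQUEST = Decimal("0.0000002")
PRICE_PER_CONFIG_RECEIVED = Decimal("0.0008")


def monthly_cost(targets: int, poll_minutes: int, updates_per_day: int,
                 days: int = 30) -> Decimal:
    """Estimate the monthly AppConfig bill (assumes a 30-day month by default)."""
    # Each target polls AppConfig once every poll_minutes minutes.
    requests = targets * (24 * 60 // poll_minutes) * days
    # Each configuration update is received once by every target.
    received = targets * updates_per_day * days
    return requests * PRICE_PER_REQUEST + received * PRICE_PER_CONFIG_RECEIVED


total = monthly_cost(targets=2000, poll_minutes=2, updates_per_day=3)
print(total)
print(round(total / 3))  # cups of coffee at $3 a cup
```

Decimal avoids floating-point rounding noise in money calculations. In this example, 43.2 million requests cost $8.64 and 180,000 received configurations cost $144, so the bill is dominated by how often the configuration changes rather than by polling.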

The Demo

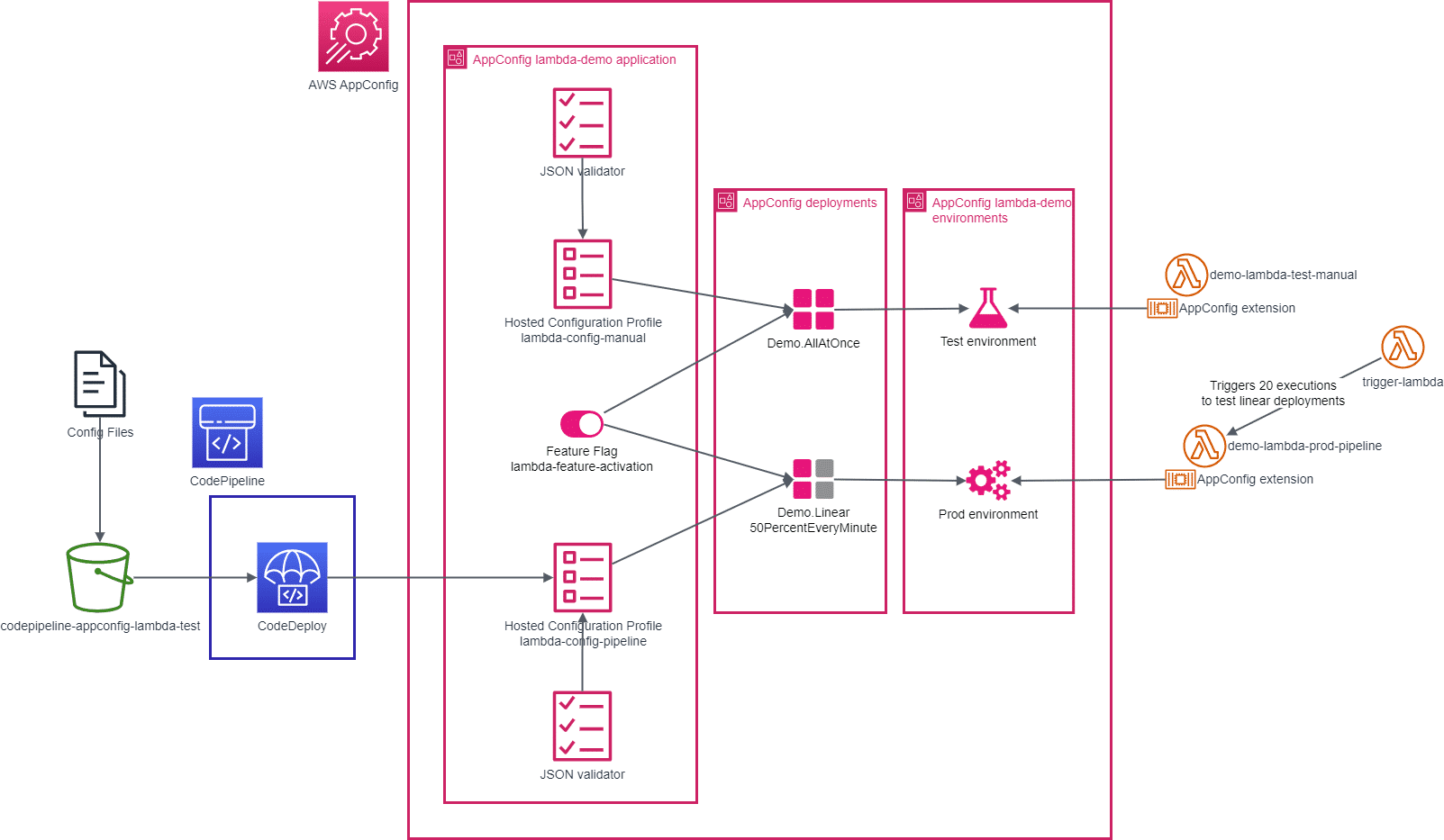

I have released a demo with instructions in this GitHub repository. With this demo you can:

- See Lambda infrastructure configuration (e.g., ARM architecture in test vs x86 in prod) in Terraform and application configuration managed through AWS AppConfig

- Test application configuration and feature flags in 2 different environments (test and prod)

- Deploy configuration from 2 different sources (the AWS AppConfig store in test and AWS CodePipeline in prod)

- Test two different deployment scenarios (all at once² in test and 50% linear³ in prod)

To allow all those tests, the demo has 2 environments, Test and Prod. In both environments there is an AWS Lambda Function with the AWS AppConfig extension and a cache refresh interval of 30 seconds. The AWS Lambda Functions are deployed with different architectures (ARM in Test and x86 in Prod), which illustrates the decoupling of the application infrastructure from the application configuration. The application hosted by these functions performs matrix computations and has two configuration parameters:

- A feature flag to activate or deactivate additional matrix operations.

- A configuration parameter to control the matrix sizes.
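The sketch below shows how such an application might consume the two parameters. It is a hypothetical stand-in, not the demo's code: the flag name extra_operations, the matrix contents and the extra operation are illustrative, and the flag format (each flag name mapped to attributes such as "enabled") is an assumption about the feature flag payload returned by the extension.

```python
def matmul(a: list, b: list) -> list:
    """Naive square matrix multiplication."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def is_enabled(flags: dict, name: str) -> bool:
    """Assumed flag payload shape: {"flag_name": {"enabled": true, ...}}."""
    return bool(flags.get(name, {}).get("enabled", False))


def run_matrix_job(matrix_size: int, extra_operations: bool) -> dict:
    # matrix_size comes from the configuration profile,
    # extra_operations from the (hypothetical) feature flag.
    a = [[i + j for j in range(matrix_size)] for i in range(matrix_size)]
    product = matmul(a, a)
    result = {"matrix_size": matrix_size,
              "trace": sum(product[i][i] for i in range(matrix_size))}
    if extra_operations:
        # Additional work that only runs when the flag is turned on.
        transposed = [list(row) for row in zip(*product)]
        result["element_sum"] = sum(map(sum, transposed))
    return result


flags = {"extra_operations": {"enabled": True}}
print(run_matrix_job(2, is_enabled(flags, "extra_operations")))
```

Because both values are read at run time, changing the matrix size or flipping the flag only requires an AppConfig deployment, not a redeployment of the function.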

In the Test environment, the configuration is pulled from the AWS AppConfig hosted configuration store and deployed “all at once”, whereas in Prod it comes from AWS CodePipeline. For the sake of simplicity, the pipeline's source is an S3 bucket instead of a code repository. The Prod environment uses a 50% linear deployment strategy.

Another AWS Lambda Function tests the linear deployment in the Prod environment. It performs 20 parallel synchronous invocations of the application. If you change the matrix_size parameter in the prod configuration file and perform these steps:

1. Trigger the test function so that the extensions cache the current configuration.

2. Push a new version of the configuration file, which triggers the pipeline and the Prod environment deployment.

3. Re-trigger the test function within 30 seconds of the deployment start.

4. Re-trigger the test function after 30 seconds but before the end of the deployment (within 60 seconds).

5. Re-trigger the test function some time after the deployment has finished.

In the CloudWatch Logs⁴, you will see the matrix_size parameter change across the 20 AWS Lambda Function executions.

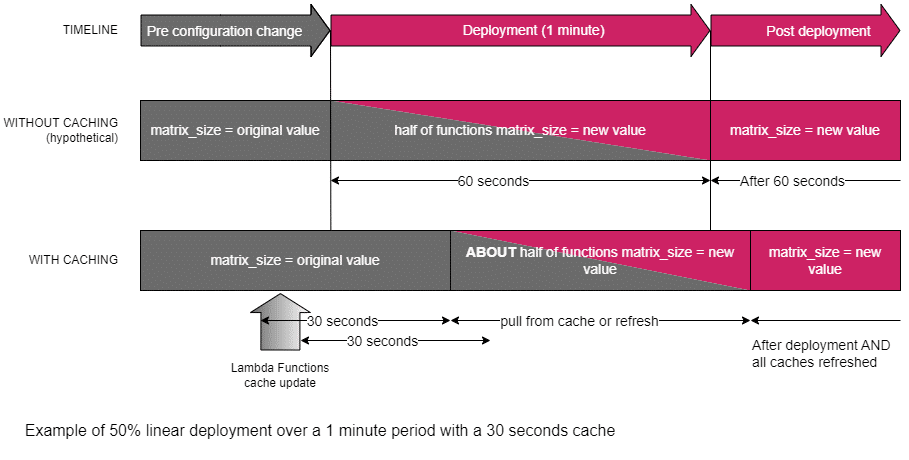
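The test function that fires the 20 parallel synchronous invocations could look roughly like the following sketch. It uses the boto3 Lambda client's invoke call with InvocationType="RequestResponse"; the target function name matrix-app-prod and the request payload key are hypothetical, and boto3 is imported lazily so the fan-out logic can be exercised without AWS credentials.

```python
import json
from concurrent.futures import ThreadPoolExecutor


def invoke_with_boto3(function_name: str):
    """Return a callable that synchronously invokes the given Lambda function."""
    import boto3  # lazy import: only needed when actually talking to AWS
    client = boto3.client("lambda")

    def invoke(payload: dict) -> dict:
        response = client.invoke(
            FunctionName=function_name,
            InvocationType="RequestResponse",  # synchronous invocation
            Payload=json.dumps(payload).encode(),
        )
        return json.loads(response["Payload"].read())

    return invoke


def fan_out(invoke, count: int = 20) -> list:
    """Run count invocations in parallel and return their results in order."""
    with ThreadPoolExecutor(max_workers=count) as pool:
        return list(pool.map(invoke, [{"request": i} for i in range(count)]))


def handler(event, context):
    # Hypothetical name of the Prod application function under test.
    results = fan_out(invoke_with_boto3("matrix-app-prod"))
    sizes = [r.get("matrix_size") for r in results]
    print(json.dumps({"matrix_sizes": sizes}))  # printed output lands in CloudWatch Logs
    return sizes
```

Because the 20 calls overlap in time, Lambda typically needs several concurrent execution environments to serve them, each with its own extension cache, which is what makes the mix of old and new values visible.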

Furthermore, the configuration change does not propagate linearly to the 20 AWS Lambda Function executions: you will not immediately get exactly 10 executions with the old value and 10 with the new one.

Why?

Hypothetically, without caching, the AWS Lambda Function would query the AWS AppConfig service on every invocation. In this demo, during the configuration deployment, the AWS AppConfig service answers with the old value half of the time and with the new value the other half. As a result, the application would use the old configuration half of the time and the new configuration the other half.

But the AWS AppConfig extension caches the configuration (45 seconds by default, 30 seconds in the demo), and you don't control which Lambda execution environment serves a given request. This has four consequences:

1. Even after the deployment has started, as long as all AppConfig extensions still hold a valid cache, every execution keeps using the cached configuration values.

2. Once the extensions start refreshing their caches, the observed ratio of old to new values depends on when each cache is refreshed and which execution environment serves each invocation, so it will not match the deployment percentage exactly.

3. After the deployment has finished, extensions that still cache the old value keep serving it. Only once the deployment is finished and every extension has refreshed its cache does the application fully use the new configuration.

4. If alarms are raised during the bake time and the configuration is rolled back, it takes up to one cache refresh interval (depending on the cache configuration) before every execution uses the previous configuration again.
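These effects can be reproduced with a small toy simulation. It is a simplified model, not how AWS AppConfig is implemented: following the description above, the service is assumed to return the new value with a probability equal to the current deployment percentage, the 50% linear strategy is modeled as two 30-second steps, each of 20 execution environments refreshes its cache lazily once it is older than 30 seconds, and routing of invocations to environments is ignored. All names and timings are illustrative.

```python
import random
from dataclasses import dataclass


@dataclass
class ExtensionCache:
    """Toy model of one execution environment's AppConfig extension cache."""
    ttl: float                         # refresh interval in seconds (30 in the demo)
    value: str = ""
    fetched_at: float = float("-inf")

    def get(self, now: float, service) -> str:
        # Serve the cached value while it is fresh; otherwise ask the service.
        if now - self.fetched_at >= self.ttl:
            self.value = service(now)
            self.fetched_at = now
        return self.value


def linear_service(start: float, step_seconds: float, growth: float,
                   rng: random.Random):
    """Toy linear deployment: from start, a request gets the new value with a
    probability that grows by growth every step_seconds (capped at 100%)."""
    def service(now: float) -> str:
        if now < start:
            return "old"
        fraction = min(1.0, growth * (int((now - start) // step_seconds) + 1))
        return "new" if rng.random() < fraction else "old"
    return service


def simulate(seed: int = 7) -> dict:
    rng = random.Random(seed)
    # Deployment starts at t=15 s: 50% for the first 30 s, then 100%.
    service = linear_service(start=15, step_seconds=30, growth=0.5, rng=rng)
    caches = [ExtensionCache(ttl=30) for _ in range(20)]
    # Count how many of the 20 executions see the new value at each test time.
    return {t: sum(c.get(t, service) == "new" for c in caches)
            for t in (0, 20, 35, 200)}


print(simulate())
```

At t=20 the deployment has started but every cache is still warm, so no execution sees the new value (consequence 1). At t=35 the caches refresh during the 50% step and the count is a binomial draw, usually near 10 but rarely exactly 10 (consequence 2). Long after the deployment, all 20 executions see the new value.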

In short, the deployment strategy (50% over a 1-minute duration in the demo) controls the configuration value returned by the AWS AppConfig service. But due to caching and function scheduling, the rate at which the AWS Lambda Functions will use the updated configuration will vary.

Conclusions

AWS AppConfig is a very useful service to decouple the management and deployment of application configuration from your infrastructure. Depending on the volume and scheduling of your application’s AWS Lambda Functions, the AWS AppConfig extension cache timer you configure and the deployment strategy you use, the rate at which application configuration will propagate will vary. Costs will also vary based on those factors and how often you update your application configuration.

1 AWS AppConfig pricing https://aws.amazon.com/systems-manager/pricing/#AppConfig

2 All-at-once deployment https://docs.aws.amazon.com/whitepapers/latest/introduction-devops-aws/all-at-once-deployments.html

3 Linear deployment https://docs.aws.amazon.com/whitepapers/latest/introduction-devops-aws/linear-deployments.html

4 CloudWatch Logs https://docs.aws.amazon.com/lambda/latest/dg/monitoring-cloudwatchlogs.html